Amazon ECS vs EKS: Comparing AWS Container Platforms

TL;DR

Business teams have always expected their product engineering teams to build applications that can scale up or down quickly and securely while keeping costs in check.

Containers are a great tool for achieving this. They provide a standardized way to package application code, configuration, and dependencies into a single object. Most importantly, containers virtualize at the operating-system level rather than the hardware level, so they start faster and use less memory than virtual machines (VMs).

What is Container Orchestration?

The advent of container orchestration has fundamentally changed how applications are developed, deployed, and managed. Container orchestration is the process of automating the deployment, scaling, and operation of application containers across clusters of hosts.

This process ensures that containers run as intended, with appropriate resource allocation, and allows for easy scaling based on demand. The widespread adoption of container orchestration, especially in dynamic cloud environments, highlights the growing need for efficient container management.

While managing a single container on one host is relatively straightforward, juggling many containers across many hosts quickly becomes impractical. Orchestration tools address this by ensuring that the right containers run at the right time with adequate resources. They also provide isolation for security and efficiency, letting organizations focus on development instead of getting caught up in operational challenges.

Key Advantages of Container Orchestration

Scalability: Easily scale the number of containers up or down based on traffic, maintaining performance during peak times.

Handling Downtime: Distributes workloads so that applications remain accessible even if individual containers or hosts fail.

Provisioning: Avoids over-provisioning and under-utilization of hardware resources, which can significantly reduce costs. By optimizing resource allocation, you use infrastructure efficiently, maximizing performance while minimizing waste.

Load Balancing: Distributes incoming traffic evenly across containers, enhancing performance and user experience.

Automated Deployment and Updates: Streamlines the deployment of new application versions and updates, supporting continuous integration and continuous deployment (CI/CD) practices.
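The scalability advantage above is usually driven by a simple proportional rule. The sketch below uses the formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with its default 10% tolerance. The function name and the min/max bounds are our own illustration, not any service's API.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA (illustrative)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target -> scale out to 6.
print(desired_replicas(4, 90.0, 60.0))
```

Rounding up with `ceil` errs on the side of extra capacity. The tolerance band stops the replica count from flapping when the metric hovers near the target.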

How Container Management Works

Container management begins with creating and configuring containers. Each container is an executable package that bundles everything needed to run an application, such as application code, runtime, system tools, and libraries. This ensures a consistent application environment across deployment scenarios.

Once the containers are created, the focus shifts to orchestration. This involves automating the deployment and operation of containers and scheduling them across clusters. Orchestration tools make intelligent decisions about where to run containers based on resource availability, constraints, and inter-container dependencies.
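To make "deciding where to run containers" concrete, here is a deliberately simplified first-fit placement sketch. It is not how the ECS or Kubernetes schedulers are implemented, since both also weigh placement constraints, affinity, and spread strategies. The `Host` and `Container` types are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    free_cpu: int  # CPU units (1024 units = 1 vCPU, the way ECS counts them)
    free_mem: int  # MiB
    containers: list = field(default_factory=list)


@dataclass
class Container:
    name: str
    cpu: int
    mem: int


def place(containers, hosts):
    """First-fit placement: put each container on the first host with room.

    Returns the names of containers that could not be placed anywhere.
    """
    unplaced = []
    # Place the largest requests first so big containers are not starved.
    for c in sorted(containers, key=lambda x: (x.cpu, x.mem), reverse=True):
        for h in hosts:
            if h.free_cpu >= c.cpu and h.free_mem >= c.mem:
                h.free_cpu -= c.cpu
                h.free_mem -= c.mem
                h.containers.append(c.name)
                break
        else:
            # No host had enough CPU and memory left.
            unplaced.append(c.name)
    return unplaced


hosts = [Host("a", free_cpu=2048, free_mem=4096), Host("b", free_cpu=2048, free_mem=4096)]
pending = [
    Container("web", 1024, 2048),
    Container("api", 512, 1024),
    Container("worker", 1024, 1024),
    Container("batch", 2048, 2048),
]
unplaced = place(pending, hosts)
print({h.name: h.containers for h in hosts}, unplaced)
```

Here "api" stays pending because both hosts run out of CPU. A real orchestrator would report the task as unschedulable or trigger cluster scale-out.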

Managing many containers at scale, that is, container orchestration, can be a great source of confusion. In this blog, we look at two widely used container orchestration services, Amazon ECS and Amazon EKS, and compare them to help you decide which one best suits your organization.

Amazon Elastic Container Service (ECS)

Amazon Elastic Container Service (Amazon ECS) is a high-performance container orchestration service that makes it easy to run and scale containerized applications on AWS.

ECS is built to perform at scale. It offers high availability, security, and deep integration with AWS services such as Elastic Load Balancing, Amazon VPC, and AWS IAM. Amazon ECS also supports AWS Fargate, which lets you run containers without provisioning servers and so reduces management overhead.

Amazon Elastic Kubernetes Service (EKS)

Amazon Elastic Kubernetes Service (Amazon EKS) makes it easy to deploy, manage, and scale containerized applications using Kubernetes on AWS and on on-premises servers. Applications running on Amazon EKS are fully compatible with applications running in any standard Kubernetes environment.

Some argue that Amazon ECS is simpler to use and provides better security thanks to its IAM integration. Others say that Amazon EKS is far more versatile because it builds on Kubernetes, an open-source tool. We have put together a detailed comparison to help you make an informed decision.

Amazon ECS vs Amazon EKS

Managing Controls

Both ECS and EKS are managed services whose control planes are operated by AWS. Both manage their workloads (tasks in ECS, pods in EKS) with high availability and reliability.

Monitoring and Container Platform Resource Management

Both platforms include built-in monitoring that restarts containers when they stop working correctly or exceed their resource limits. Both also let users specify resource constraints, such as CPU and memory, which enables more informed scheduling and resource management.
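The self-healing behaviour described above can be sketched as a toy control loop. The loop compares each container's observed state with its limits and restarts the containers that are unhealthy or over their memory limit. The `ContainerState` type and the restart logic are hypothetical simplifications, not the ECS agent's or the kubelet's actual code.

```python
from dataclasses import dataclass


@dataclass
class ContainerState:
    name: str
    healthy: bool
    mem_used_mib: int
    mem_limit_mib: int
    restarts: int = 0


def reconcile(containers):
    """Restart containers that fail health checks or exceed their memory limit.

    Returns the names of the containers that were restarted.
    """
    restarted = []
    for c in containers:
        over_limit = c.mem_used_mib > c.mem_limit_mib
        if not c.healthy or over_limit:
            # A real orchestrator would kill and reschedule the container;
            # here we only reset its simulated state and count the restart.
            c.healthy = True
            c.mem_used_mib = 0
            c.restarts += 1
            restarted.append(c.name)
    return restarted


fleet = [
    ContainerState("web-1", healthy=True, mem_used_mib=300, mem_limit_mib=512),
    ContainerState("web-2", healthy=False, mem_used_mib=200, mem_limit_mib=512),
    ContainerState("worker-1", healthy=True, mem_used_mib=900, mem_limit_mib=512),
]
print(reconcile(fleet))  # ['web-2', 'worker-1']
```

Running the loop repeatedly is what makes it self-healing. A second pass over the same fleet finds nothing to fix.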

AWS Fargate

Both ECS and EKS support AWS Fargate, a serverless compute engine for containers that lets developers focus on building applications.

Fargate provides on-demand, right-sized compute capacity for containers that run as Kubernetes pods in an Amazon EKS cluster or as service tasks in an ECS cluster. Each pod or task runs with the compute capacity it requests, in its own VM-isolated environment that does not share resources with other pods or tasks.
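On ECS, the compute capacity a Fargate task requests is declared in its task definition. The sketch below builds keyword arguments in the shape expected by boto3's `ecs.register_task_definition()`, and it also shows container logs being shipped to CloudWatch Logs with the `awslogs` driver. The family name, image, log group, region, and role ARN are hypothetical placeholders. The CPU/memory pair must be one of the combinations Fargate supports. Fargate tasks that use `awslogs` also need a task execution role.

```python
import json


def fargate_task_definition(family: str, image: str, cpu: str, memory: str,
                            log_group: str, region: str,
                            execution_role_arn: str) -> dict:
    """Keyword arguments for boto3's ecs.register_task_definition() (sketch)."""
    return {
        "family": family,
        # Fargate tasks must use the awsvpc network mode.
        "networkMode": "awsvpc",
        "requiresCompatibilities": ["FARGATE"],
        # Task-level size: "256" CPU units = 0.25 vCPU, memory in MiB.
        "cpu": cpu,
        "memory": memory,
        # Role the ECS agent uses to pull images and write logs.
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
                # Send container stdout/stderr to CloudWatch Logs.
                "logConfiguration": {
                    "logDriver": "awslogs",
                    "options": {
                        "awslogs-group": log_group,
                        "awslogs-region": region,
                        "awslogs-stream-prefix": family,
                    },
                },
            }
        ],
    }


td = fargate_task_definition(
    family="web",                      # hypothetical
    image="nginx:latest",
    cpu="256",
    memory="512",
    log_group="/ecs/web",              # hypothetical
    region="us-east-1",
    execution_role_arn="arn:aws:iam::123456789012:role/ecsTaskExecutionRole",  # hypothetical
)
print(json.dumps(td, indent=2))
# With AWS credentials configured: boto3.client("ecs").register_task_definition(**td)
```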

Infrastructure Compatibility

ECS is an AWS-specific orchestrator. Workloads are described with ECS task definitions, which only ECS understands, so ECS does not offer the cross-infrastructure portability that EKS does.

EKS is a Kubernetes-as-a-service offering on AWS. Because it runs standard Kubernetes, the same workloads can run on any conformant Kubernetes cluster. This makes it much easier to move them on-premises or to a different cloud provider.

Security and Accessibility

Amazon ECS is deeply integrated with IAM, enabling customers to assign granular permissions to each task through IAM task roles. Using IAM, customers can restrict each service's access and control which resources its containers can reach.
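A minimal sketch of the two IAM documents behind an ECS task role, written as Python dicts that serialize to standard IAM policy JSON. The trust policy lets the `ecs-tasks.amazonaws.com` service principal assume the role. The permissions policy grants read-only access to a single bucket. The bucket name is a hypothetical placeholder. The resulting role's ARN would be set as `taskRoleArn` in the task definition.

```python
import json

# Trust policy: allows ECS tasks to assume this role.
TASK_ROLE_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ecs-tasks.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def read_only_bucket_policy(bucket: str) -> dict:
    """Least-privilege permissions: read objects from one bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


policy = read_only_bucket_policy("example-app-assets")  # hypothetical bucket
print(json.dumps(TASK_ROLE_TRUST_POLICY, indent=2))
print(json.dumps(policy, indent=2))
```

Because the role is attached per task definition, two services in the same cluster can hold entirely different permissions.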

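EKS can run more pods per EC2 worker node than ECS can run tasks in awsvpc network mode. The Amazon VPC CNI gives pods secondary IP addresses from the node's ENIs, whereas each awsvpc task needs an ENI of its own. Originally, IAM roles could not be assigned natively to individual pods in EKS, so teams used add-ons such as KIAM to enable this. AWS has since introduced IAM roles for service accounts, described below.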
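The density difference can be worked out with simple arithmetic. The sketch below uses the default Amazon VPC CNI formula, max pods = ENIs × (IPv4 addresses per ENI − 1) + 2, without prefix delegation. It assumes ECS awsvpc mode without ENI trunking, where each task needs its own ENI and the instance keeps its primary ENI. The m5.large figures (3 ENIs, 10 IPv4 addresses per ENI) are taken from the EC2 instance-type limits. Prefix delegation on EKS and ENI trunking on ECS both raise these numbers considerably.

```python
def eks_max_pods(max_enis: int, ipv4_per_eni: int) -> int:
    """Default max pods per node with the Amazon VPC CNI (no prefix delegation).

    Each ENI keeps one primary IP for itself; the "+ 2" accounts for
    host-network pods such as kube-proxy and the aws-node daemon.
    """
    return max_enis * (ipv4_per_eni - 1) + 2


def ecs_awsvpc_max_tasks(max_enis: int) -> int:
    """Tasks per instance in ECS awsvpc mode without ENI trunking.

    Each task needs its own ENI, and the instance keeps its primary ENI.
    """
    return max_enis - 1


# m5.large: 3 ENIs, 10 IPv4 addresses per ENI
print(eks_max_pods(3, 10))       # 29
print(ecs_awsvpc_max_tasks(3))   # 2
```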

Cloud Integrations and Automation

ECS provides built-in integrations with AWS services. For example, it automatically registers and deregisters containers as targets on an existing load balancer, depending on where the containers are running, which makes load balancer setup simple. ECS can also forward container logs to CloudWatch Logs streams through the awslogs log driver.

Kubernetes also integrates with AWS load balancers and can create and destroy them on the fly. By deploying ExternalDNS into the cluster, we can integrate with Route 53 or other DNS providers, and with Fluentd we can send cluster logs to CloudWatch Logs. AWS has also introduced IAM roles for service accounts (IRSA), which grants fine-grained IAM permissions to pods through Kubernetes service accounts without distributing keys. Additionally, Kubernetes integrates with Amazon EBS volumes, automating their lifecycle based on workload requests.
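IRSA ties an IAM role to a Kubernetes service account in two places. The IAM role's trust policy allows `sts:AssumeRoleWithWebIdentity` from the cluster's OIDC provider, scoped to one service account. The service account carries an `eks.amazonaws.com/role-arn` annotation. The sketch below builds both as Python dicts. The account ID, OIDC issuer ID, namespace, and names are hypothetical placeholders.

```python
import json


def irsa_trust_policy(account_id: str, oidc_issuer: str,
                      namespace: str, service_account: str) -> dict:
    """Trust policy letting one Kubernetes service account assume an IAM role.

    oidc_issuer is the cluster's OIDC issuer URL without the https:// prefix.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_issuer}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Only this namespace/service account may assume the role.
                        f"{oidc_issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                        f"{oidc_issuer}:aud": "sts.amazonaws.com",
                    }
                },
            }
        ],
    }


def service_account_manifest(namespace: str, name: str, role_arn: str) -> dict:
    """ServiceAccount annotated with the IAM role its pods should use."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {"eks.amazonaws.com/role-arn": role_arn},
        },
    }


issuer = "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE"  # hypothetical
trust = irsa_trust_policy("111122223333", issuer, "payments", "payments-api")
sa = service_account_manifest(
    "payments", "payments-api", "arn:aws:iam::111122223333:role/payments-api"  # hypothetical
)
print(json.dumps(trust, indent=2))
print(json.dumps(sa, indent=2))
```

Pods that set `serviceAccountName: payments-api` then receive temporary credentials for that role, with no long-lived keys stored in the cluster.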

Namespaces

ECS has no concept of namespaces, but Kubernetes does. Namespaces isolate workloads running in the same cluster. While this may seem like a minor feature, it offers real advantages. For example, staging and production namespaces can run in the same cluster and share its resources, reducing spare capacity.
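Sharing one cluster between environments usually goes hand in hand with per-namespace quotas, so that staging cannot starve production. A minimal sketch, assuming standard Kubernetes `Namespace` and `ResourceQuota` objects. The namespace names and quota sizes are hypothetical. The output is a JSON `List` that `kubectl apply -f` accepts just as it accepts YAML.

```python
import json


def namespace_with_quota(name: str, cpu: str, memory: str) -> list:
    """Return a Namespace plus a ResourceQuota capping its total resource requests."""
    namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{name}-quota", "namespace": name},
        "spec": {"hard": {"requests.cpu": cpu, "requests.memory": memory}},
    }
    return [namespace, quota]


# Staging gets a smaller slice of the shared cluster than production (hypothetical sizes).
manifests = namespace_with_quota("staging", "4", "8Gi") + namespace_with_quota(
    "production", "16", "32Gi"
)
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```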

Simplicity

ECS is a more straightforward platform, with fewer moving parts, components, and features than Kubernetes. AWS aims ECS at workloads with simple architectures that mainly need some CPU and memory and will not evolve much over time. ECS offers tighter AWS integration out of the box and requires little maintenance.

EKS requires more skill to operate well, and teams must follow best practices to get all of its benefits.

Pricing

The Amazon ECS control plane is free of charge, regardless of how many clusters you run. The EKS control plane, by contrast, is billed hourly: $0.10 per hour for each EKS cluster you create.
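To put the hourly rate in monthly terms, here is a small worked calculation. It assumes an average month of 730 hours (8,760 hours per year / 12) and the $0.10/hour figure quoted above. It covers only the control plane: EC2 worker nodes or Fargate capacity are billed separately on both services.

```python
HOURS_PER_MONTH = 730                 # average hours in a month (8,760 / 12)
EKS_CONTROL_PLANE_USD_PER_HOUR = 0.10  # rate quoted above


def monthly_control_plane_cost(clusters: int) -> float:
    """EKS control-plane cost only; compute is billed separately."""
    return clusters * EKS_CONTROL_PLANE_USD_PER_HOUR * HOURS_PER_MONTH


print(f"EKS, 1 cluster:  ${monthly_control_plane_cost(1):.2f}/month")   # $73.00
print(f"EKS, 3 clusters: ${monthly_control_plane_cost(3):.2f}/month")   # $219.00
print("ECS, any number of clusters: $0.00/month")
```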

So, where does each of them fit?

You should consider Amazon ECS if you are looking to work solely in the AWS cloud.

Amazon EKS offers more versatility for running container deployments across multiple infrastructure providers, along with the additional flexibility of Kubernetes.

Our Key Notes

In our experience, ECS quickly becomes limiting as an application grows in complexity and size. We therefore need to understand a project's growth expectations before choosing a container orchestrator. Most SaaS workloads we have come across quickly outgrow ECS.

Managing Amazon EKS and ECS Together: A Few High-Level Key Points

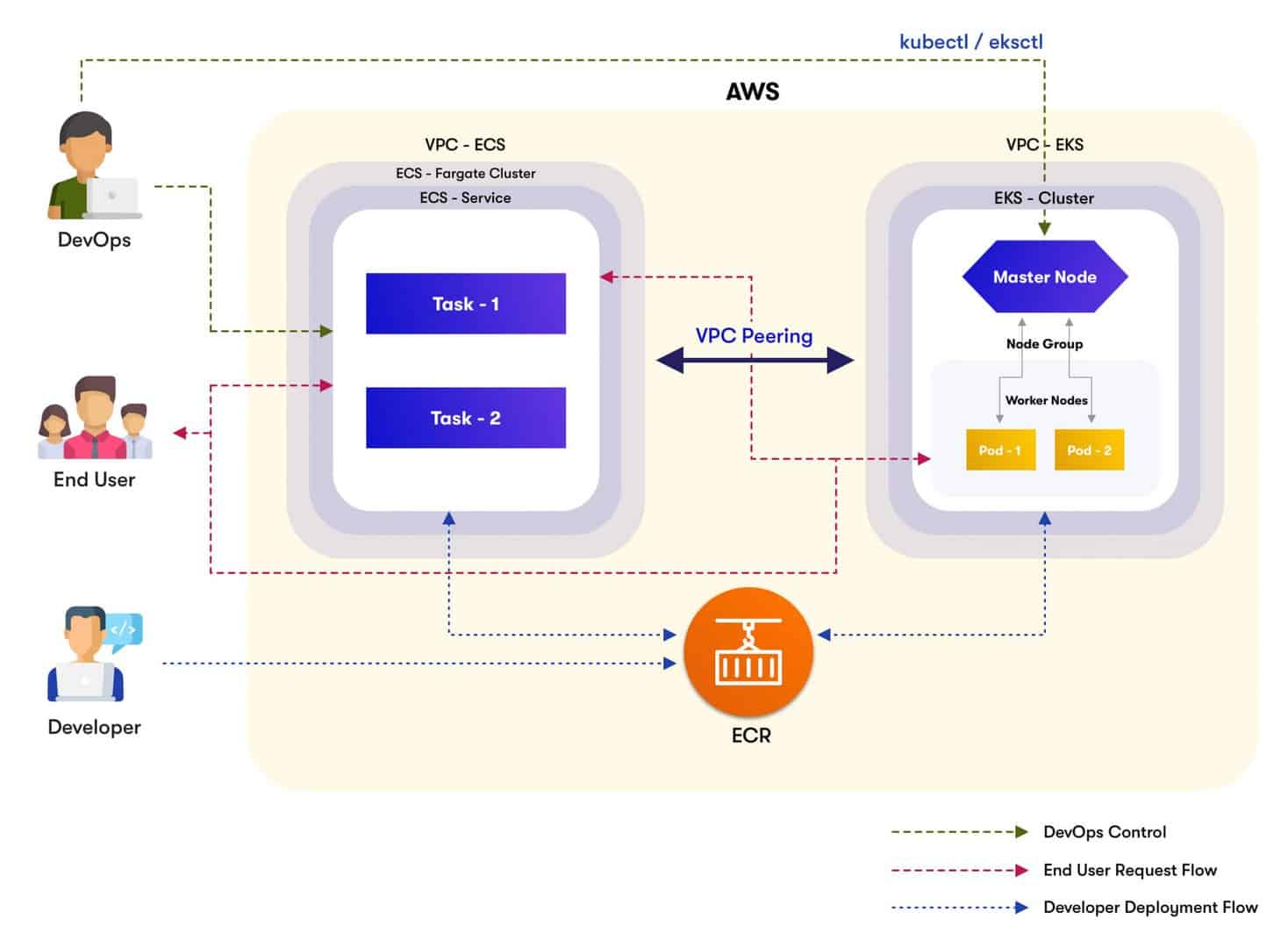

Suppose we have applications managed by both Amazon EKS and ECS. To manage them and the connectivity between them, we can either migrate everything to one platform or connect the two internally.

We have tested this: without significant architecture changes or migration work, we can connect existing applications in ECS and EKS internally. By the way, did you know that you can connect VPCs across multiple AWS accounts using VPC peering?
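When the ECS and EKS workloads live in different VPCs or accounts, a peering connection is one way to link them. The sketch below uses boto3's `create_vpc_peering_connection` and `accept_vpc_peering_connection` EC2 calls. The clients are passed in so the function can be exercised without AWS access. All IDs and the region are hypothetical placeholders.

```python
def peer_vpcs(requester_ec2, accepter_ec2, requester_vpc_id, accepter_vpc_id,
              accepter_account_id, accepter_region):
    """Request and accept a VPC peering connection between two VPCs.

    requester_ec2 / accepter_ec2 are boto3 EC2 clients for each side, e.g.
    boto3.client("ec2", region_name=...) created with each account's credentials.
    """
    resp = requester_ec2.create_vpc_peering_connection(
        VpcId=requester_vpc_id,
        PeerVpcId=accepter_vpc_id,
        PeerOwnerId=accepter_account_id,
        PeerRegion=accepter_region,
    )
    pcx_id = resp["VpcPeeringConnection"]["VpcPeeringConnectionId"]
    # The owner of the accepter VPC must explicitly accept the request.
    accepter_ec2.accept_vpc_peering_connection(VpcPeeringConnectionId=pcx_id)
    return pcx_id
```

Peering alone does not route any traffic. Both sides still need route-table entries pointing the other VPC's CIDR at the peering connection, plus security-group rules that allow the traffic. The VPC CIDR ranges must not overlap. In practice you may also need to wait until the request reaches the `pending-acceptance` state before accepting it.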

Observability

- For both Amazon ECS and EKS, CloudWatch metrics provide CPU and memory utilization for services and pods. If we need more detailed monitoring, we can enable CloudWatch Container Insights.

- CloudWatch Container Insights is common to both ECS and EKS. It collects, aggregates, and summarizes metrics and logs from containerized applications and microservices to provide performance metrics such as CPU, memory, network, and node metrics. Container Insights is available for Amazon ECS, Amazon EKS, and Kubernetes platforms on Amazon EC2.

- CloudTrail and the cluster dashboard provide a timeline that tracks cluster-change events such as deployments and scaling in and out.

- For fault detection, we can set up Amazon CloudWatch alarms with thresholds. With notifications when an alarm moves between the ALARM and OK states, we can take timely action when necessary.
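Two of the observability steps above can be sketched for an ECS service: turning on Container Insights for a cluster, and alarming on sustained high CPU. The sketch uses boto3's `ecs.update_cluster_settings` and `cloudwatch.put_metric_alarm` calls, with the clients passed in. The cluster, service, SNS topic ARN, and threshold values are hypothetical choices.

```python
def enable_container_insights(ecs, cluster: str) -> None:
    """Turn on CloudWatch Container Insights for an existing ECS cluster."""
    ecs.update_cluster_settings(
        cluster=cluster,
        settings=[{"name": "containerInsights", "value": "enabled"}],
    )


def create_cpu_alarm(cloudwatch, cluster: str, service: str,
                     topic_arn: str, threshold: float = 80.0) -> str:
    """Alarm when an ECS service's average CPU stays above the threshold."""
    name = f"{cluster}-{service}-high-cpu"
    cloudwatch.put_metric_alarm(
        AlarmName=name,
        Namespace="AWS/ECS",
        MetricName="CPUUtilization",
        Dimensions=[
            {"Name": "ClusterName", "Value": cluster},
            {"Name": "ServiceName", "Value": service},
        ],
        Statistic="Average",
        Period=60,            # seconds per datapoint
        EvaluationPeriods=5,  # 5 consecutive breaching datapoints -> ALARM
        Threshold=threshold,
        ComparisonOperator="GreaterThanThreshold",
        # Notify the same SNS topic on ALARM and on recovery to OK.
        AlarmActions=[topic_arn],
        OKActions=[topic_arn],
    )
    return name


# Usage (with AWS credentials configured):
#   enable_container_insights(boto3.client("ecs"), "prod")
#   create_cpu_alarm(boto3.client("cloudwatch"), "prod", "web",
#                    "arn:aws:sns:us-east-1:111122223333:ops-alerts")
```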

Operational Support

- Fargate is an excellent option when we consider operational support and cost.

- ECS services can use the Fargate launch type, and EKS can schedule pods onto Fargate through Fargate profiles. Both give you a serverless compute engine for containers, so developers can focus on building applications while AWS takes care of resource and capacity allocation for scaling and cluster optimization.

- Each Kubernetes pod or ECS task running on Fargate gets on-demand, right-sized compute capacity matching what it requests, and runs in isolation from other pods or tasks.

- For EC2-backed clusters (for example, on-demand instances), we have to set up autoscaling at both the EC2 instance level and the service task/pod level.
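For the task level on ECS, service auto scaling goes through Application Auto Scaling. First register the service's desired count as a scalable target, then attach a target-tracking policy. The sketch below uses boto3's `register_scalable_target` and `put_scaling_policy` calls with the client passed in. The cluster, service, bounds, and 60% CPU target are hypothetical choices.

```python
def autoscale_ecs_service(aas, cluster: str, service: str,
                          min_tasks: int, max_tasks: int,
                          target_cpu: float = 60.0) -> None:
    """Target-tracking auto scaling for an ECS service's desired task count.

    aas is a boto3 Application Auto Scaling client,
    e.g. boto3.client("application-autoscaling").
    """
    resource_id = f"service/{cluster}/{service}"
    dimension = "ecs:service:DesiredCount"
    # 1. Declare which value may be adjusted, and within what bounds.
    aas.register_scalable_target(
        ServiceNamespace="ecs",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        MinCapacity=min_tasks,
        MaxCapacity=max_tasks,
    )
    # 2. Add or remove tasks to keep average service CPU near the target.
    aas.put_scaling_policy(
        PolicyName=f"{service}-cpu-target",
        ServiceNamespace="ecs",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": target_cpu,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
            },
        },
    )
```

This only covers the task level. The EC2 instances underneath still need their own scaling, for example an Auto Scaling group behind an ECS capacity provider with managed scaling. On EKS, the equivalent split is the Horizontal Pod Autoscaler for pods plus a node autoscaler such as Cluster Autoscaler or Karpenter.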

We hope you found this blog useful. We would love to hear your feedback and comments below.

Optimize Your Container Management with Ideas2IT!

Effective container management is essential for maximizing efficiency, scalability, and security in application development. By leveraging our expertise, you can streamline your processes, reduce operational overhead, and ensure that your applications run smoothly across various environments.

Ready to transform your container management strategy with Ideas2IT? Explore our custom application development services to learn how we can help you enhance your application development with tailored container solutions. Don't wait: take the first step towards optimization today!
