AWS HIPAA Compliance for Healthcare Data Security

TL;DR

Gone are the days when healthcare organizations built their data infrastructure exclusively on-premises. Given the advantages of the cloud, healthcare organizations need a strong cloud strategy that leverages the cloud’s strengths while remaining secure and compliant. Let's explore this topic in detail.

What is HIPAA?

The Health Insurance Portability and Accountability Act (HIPAA) was enacted in 1996. Under it, national standards were established to protect individuals' medical records and other personal health information. Its Security Rule requires appropriate administrative, physical, and technical safeguards to protect the confidentiality, integrity, and availability of electronic protected health information (ePHI).

Covered Entities and Business Associates Under HIPAA: Definitions

According to HIPAA, a covered entity is a healthcare provider, a health plan, or a healthcare clearinghouse.

A business associate is a person or entity that performs functions or activities on behalf of, or provides services to, a covered entity that involve creating, receiving, maintaining, or transmitting protected health information (PHI).

So if a covered entity or business associate engages a cloud service provider (CSP) such as AWS to store or process ePHI, the CSP is itself a business associate under HIPAA.

It is important for covered entities moving to a public cloud environment to understand this distinction, because a Business Associate Agreement (BAA) must then be executed to define the privacy and security responsibilities of both the covered entity and the business associate.

The Business Associate Agreement (BAA)

HIPAA requires a BAA between the covered entity and a business associate. These agreements serve to define and limit the permissible uses and disclosures of ePHI, as appropriate.

Although many AWS services can be used in healthcare applications, only the HIPAA-eligible services covered by the AWS BAA may be used to store, process, or transmit ePHI. AWS lets customers review, accept, and check the status of the BAA through a self-service portal, AWS Artifact.

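Other CSPs that offer to sign a BAA include Google, Microsoft, Box, and Dropbox. A signed BAA does not by itself make a deployment HIPAA compliant, however; the customer remains responsible for configuring and using the service in a compliant way.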

Post-BAA Steps: Risk Assessment, Security Controls, and Compliance Measures

Risk Assessment and Policy Design

After signing a BAA and before using a cloud service, covered entities should conduct a comprehensive risk assessment and design specific policies and procedures to mitigate the risks it identifies.
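HIPAA does not prescribe a scoring method. One common way to organize the findings of a risk assessment is a risk register, where each risk is scored as likelihood x impact. The following is a minimal sketch of that approach. The 1-5 scales, the "high"/"medium"/"low" thresholds, and the sample risks are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One entry in a (hypothetical) risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) .. 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) .. 5 (severe)

    def __post_init__(self):
        # Reject values outside the assumed 1-5 scales.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rating(score: int) -> str:
    """Map a likelihood x impact score (1-25) to an assumed qualitative rating."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks):
    """Return (name, score, rating) tuples, highest score first."""
    ordered = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, rating(r.score)) for r in ordered]


if __name__ == "__main__":
    register = [
        Risk("Publicly readable S3 bucket holding ePHI", likelihood=3, impact=5),
        Risk("Stale IAM access keys", likelihood=4, impact=4),
        Risk("Unpatched bastion host", likelihood=2, impact=4),
    ]
    for name, score, level in prioritize(register):
        print(f"{level:>6}  {score:>2}  {name}")
```

Each high-rated risk would then map to a specific policy or control, such as the ones described in the rest of this article.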

Assessing Security and Access Controls

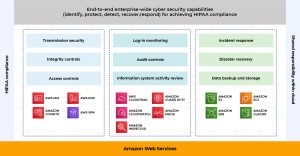

The covered entity must also assess its security and access controls so that only authorized individuals can access cloud-stored data. Effective controls across an organization’s security infrastructure are essential for a well-architected, end-to-end security posture. The goal for architects and developers is an infrastructure that can withstand potential cyberattacks. These controls should also align with the safeguards documented in the HIPAA Security Rule.

HIPAA Privacy and Security Rules

Even if a cloud service meets the requirements of the HIPAA Security Rule, covered entities must ensure they also comply with the HIPAA Privacy Rule. Under HIPAA, covered entities should implement policies and procedures before granting access to PHI. Authorization should be accomplished by defining permissions in policies and then applying those policies to users through role mapping or group membership. Organizations need a strategy for creating policies and assigning them to users that gives administrators the rights they need to perform their job functions while upholding a “least-privilege” approach. When a third-party identity provider is used, policies are applied to users through roles.
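To make the least-privilege idea concrete, the sketch below builds an IAM policy document that grants read-only access to a single S3 prefix and nothing else. Such a policy would be attached to a group or role rather than to individual users. The bucket name, the prefix, and the function name are hypothetical.

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy allowing read-only access to one S3 prefix.

    `bucket` and `prefix` are placeholders supplied by the caller.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allow listing only the keys under the given prefix.
                "Sid": "ListPrefixOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                # Allow reading objects under the prefix; no write or delete actions.
                "Sid": "ReadObjectsUnderPrefix",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket and prefix for a claims-analyst group.
    print(json.dumps(read_only_prefix_policy("example-phi-bucket", "claims"), indent=2))
```

Anything the policy does not explicitly allow is implicitly denied. Broader access is therefore granted only by adding statements deliberately.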

Emergency Access and Contingency Planning

Under HIPAA, covered entities must meet the Emergency Access Procedure requirement, which reflects the need for availability in any HIPAA-compliant environment. To meet this requirement, covered entities must put administrative controls in place, such as a data backup plan and a disaster recovery plan. This contingency plan should focus on creating and maintaining retrievable, exact copies of ePHI. That involves maintaining highly available systems, replicating both data and systems off-site, and enabling continuous access to both. Implementing and testing identity and access management controls must also be accounted for in the contingency plan: secure authentication and authorization must remain in force even when emergency access to ePHI is needed.
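One way to automate the "retrievable, exact copies" and "replicated off-site" parts of a contingency plan on AWS is an AWS Backup plan with a cross-Region copy rule. The sketch below builds a payload in the shape accepted by AWS Backup's CreateBackupPlan API (for example, `boto3.client("backup").create_backup_plan(BackupPlan=...)`). The plan name, the vault names, the destination vault ARN, the daily schedule, and the 35-day retention are assumptions.

```python
import json


def backup_plan(name: str, vault: str, dr_vault_arn: str, retain_days: int = 35) -> dict:
    """Build a BackupPlan payload with a daily rule and an off-site copy.

    `vault` is the primary backup vault name; `dr_vault_arn` is a
    (hypothetical) vault in another Region used as the off-site copy target.
    """
    if retain_days < 1:
        raise ValueError("retain_days must be positive")
    return {
        "BackupPlanName": name,
        "Rules": [
            {
                "RuleName": "daily-ephi",
                "TargetBackupVaultName": vault,
                # AWS cron syntax: every day at 05:00 UTC.
                "ScheduleExpression": "cron(0 5 * * ? *)",
                "Lifecycle": {"DeleteAfterDays": retain_days},
                # Copy each recovery point to the off-site vault with the same retention.
                "CopyActions": [
                    {
                        "DestinationBackupVaultArn": dr_vault_arn,
                        "Lifecycle": {"DeleteAfterDays": retain_days},
                    }
                ],
            }
        ],
    }


if __name__ == "__main__":
    plan = backup_plan(
        "ephi-daily",
        "primary-vault",
        "arn:aws:backup:us-west-2:111122223333:backup-vault:dr-vault",
    )
    print(json.dumps(plan, indent=2))
```

A backup plan is only half of the contingency plan. Restores from both vaults still need to be tested periodically.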

Auditing, Monitoring, and Encryption

Auditing and monitoring controls are essential to meeting the requirements of the HIPAA Security Rule. Audit controls are technical safeguards that anyone who stores, processes, or transmits ePHI should implement, for example by recording and examining activity in systems that contain ePHI. Monitoring controls include procedures for monitoring log-in attempts and reporting discrepancies. In the event of an audit, these logs must be made available to regulators.
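As an example of monitoring log-ins, the sketch below counts failed AWS console sign-ins per user from CloudTrail records. These are the dictionaries in the `Records` array of a CloudTrail log file. It relies on `ConsoleLogin` events reporting their outcome in `responseElements.ConsoleLogin`. The alert threshold of three failures and the function names are assumptions.

```python
from collections import Counter


def failed_console_logins(records) -> Counter:
    """Count failed AWS console sign-ins per user from CloudTrail records."""
    failures = Counter()
    for rec in records:
        if rec.get("eventName") != "ConsoleLogin":
            continue
        # responseElements can be null in the JSON, so fall back to {}.
        outcome = (rec.get("responseElements") or {}).get("ConsoleLogin")
        if outcome == "Failure":
            user = (rec.get("userIdentity") or {}).get("userName", "<unknown>")
            failures[user] += 1
    return failures


def users_to_flag(records, threshold: int = 3):
    """Return users whose failed sign-ins reach the (assumed) alert threshold."""
    counts = failed_console_logins(records)
    return sorted(user for user, n in counts.items() if n >= threshold)
```

In practice, the same rule would more likely run continuously, for example as a CloudWatch metric filter and alarm on the CloudTrail log group, rather than as a batch script.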

The HIPAA Security Rule also includes addressable implementation specifications for encrypting ePHI at rest and in transit. HHS guidance points to NIST standards as the benchmark for acceptable encryption algorithms.

Here is a case study of how we built a HIPAA-compliant data lake for our client

Overview

The client is a leading healthcare organization operating from more than 50 locations in the US. It envisioned a cloud-based application to handle its medical and diagnostic information (EHR data) while complying with HIPAA at every step of the process.

Ensuring HIPAA Compliance

For the project, we ensured HIPAA compliance at every stage, prioritizing data privacy and security through the following measures.

BAA Execution

We worked with our customer to execute a standardized BAA that allowed them to use any AWS service in an account designated as a HIPAA Account. We also ensured that we processed, stored, and transmitted PHI only with the HIPAA-eligible services defined in the AWS BAA.
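Because a HIPAA Account may contain services that are not HIPAA eligible, it helps to check an architecture inventory against the eligible list. The sketch below flags every component that handles PHI on a service missing from an allowlist. The allowlist is an illustrative excerpt only and would need to be maintained from AWS's published HIPAA Eligible Services Reference. The component inventory and the function name are hypothetical.

```python
# Illustrative excerpt only; maintain the real list from AWS's
# HIPAA Eligible Services Reference.
HIPAA_ELIGIBLE_EXCERPT = frozenset(
    {"Amazon S3", "Amazon RDS", "AWS KMS", "Amazon EC2", "AWS CloudTrail"}
)


def services_needing_review(components, eligible=HIPAA_ELIGIBLE_EXCERPT):
    """Return services that handle PHI but are not on the eligible list.

    `components` maps a component name to (aws_service, handles_phi).
    """
    return sorted(
        {service for service, handles_phi in components.values()
         if handles_phi and service not in eligible}
    )


if __name__ == "__main__":
    # Hypothetical inventory for a data lake.
    inventory = {
        "raw-zone": ("Amazon S3", True),
        "ehr-db": ("Amazon RDS", True),
        "marketing-site": ("Some Other Service", False),
        "new-ml-feature": ("Some Other Service", True),
    }
    print(services_needing_review(inventory))
```

Components that do not touch PHI, such as `marketing-site`, are ignored. Only PHI-handling components on non-listed services are flagged.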

Data Encryption (At Rest)

Database: Encryption was enabled for Amazon RDS DB instances and snapshots. The data encrypted included the underlying storage for DB instances, automated backups, read replicas, and snapshots. We used an AES-256 encryption algorithm to encrypt the data on the server that hosts the Amazon RDS DB instances. Amazon RDS handled authentication of access and decryption of data transparently with a minimal impact on performance.
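RDS storage encryption has to be chosen when an instance is created. An existing unencrypted instance cannot be encrypted in place; instead, you create an encrypted copy of a snapshot and restore from it. The sketch below builds keyword arguments for boto3's `rds.create_db_instance` with encryption turned on. The identifier, engine, instance class, storage size, username, and seven-day backup retention are assumptions.

```python
def encrypted_rds_params(identifier: str, kms_key_id: str,
                         engine: str = "postgres",
                         instance_class: str = "db.t3.medium",
                         storage_gb: int = 100) -> dict:
    """Build kwargs for boto3 rds.create_db_instance with storage encryption on."""
    return {
        "DBInstanceIdentifier": identifier,
        "Engine": engine,
        "DBInstanceClass": instance_class,
        "AllocatedStorage": storage_gb,
        # Must be set at creation time; it covers storage, automated backups,
        # read replicas, and snapshots of this instance.
        "StorageEncrypted": True,
        # Customer managed KMS key (key ID, key ARN, alias name, or alias ARN).
        "KmsKeyId": kms_key_id,
        "BackupRetentionPeriod": 7,
        "MultiAZ": True,
        "PubliclyAccessible": False,
        "MasterUsername": "dbadmin",
        # Let RDS generate and store the master password in Secrets Manager.
        "ManageMasterUserPassword": True,
    }


if __name__ == "__main__":
    params = encrypted_rds_params("ehr-db", "alias/ehr-db-key")
    print(params)
    # With AWS credentials configured:
    # import boto3
    # boto3.client("rds").create_db_instance(**params)
```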

File Storage: S3 default encryption sets the encryption behavior for a bucket so that every object stored in it is encrypted. Encryption was performed server-side using either Amazon S3-managed keys (SSE-S3) or AWS KMS-managed keys (SSE-KMS). Amazon S3 encrypts an object before saving it to disk in its data centers and decrypts it when you download it.
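The two options map directly onto the `ServerSideEncryptionConfiguration` argument of boto3's `s3.put_bucket_encryption`. The sketch below builds that configuration for either SSE-S3 (no key given) or SSE-KMS (key ARN given). It enables S3 Bucket Keys for the KMS case to reduce the number of KMS requests. The function name and the key ARN are hypothetical.

```python
from typing import Optional


def default_encryption_config(kms_key_arn: Optional[str] = None) -> dict:
    """Build a ServerSideEncryptionConfiguration for s3.put_bucket_encryption.

    Without a key ARN this selects SSE-S3; with one, SSE-KMS.
    """
    if kms_key_arn is None:
        default = {"SSEAlgorithm": "AES256"}  # SSE-S3
    else:
        default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_arn}  # SSE-KMS
    rule = {"ApplyServerSideEncryptionByDefault": default}
    if kms_key_arn is not None:
        # S3 Bucket Keys cut down on per-object calls to KMS.
        rule["BucketKeyEnabled"] = True
    return {"Rules": [rule]}


if __name__ == "__main__":
    print(default_encryption_config("arn:aws:kms:us-east-1:111122223333:key/example"))
```

Usage with boto3 would look like `s3.put_bucket_encryption(Bucket="example-phi-bucket", ServerSideEncryptionConfiguration=default_encryption_config(key_arn))`, where the bucket name is a placeholder.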

Encryption with AWS Key Management Service (KMS)

- Provides encryption for many AWS services

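- Provides centralized key management and lets us import our own key material

- Supports automatic rotation of customer managed keys once rotation is enabled on each key

- Integrates with AWS CloudTrail, which provides visibility into key usage and changes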
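Automatic rotation is off by default for customer managed keys. It applies only to symmetric keys whose key material KMS generated; keys with imported material must be rotated manually. The sketch below turns rotation on where it is off, using the real KMS client methods `get_key_rotation_status` and `enable_key_rotation`. It takes the client as a parameter so it can be exercised offline. The `FakeKMS` class is a hypothetical test double, not part of any AWS library.

```python
def ensure_key_rotation(kms, key_id: str) -> bool:
    """Enable automatic rotation on a customer managed KMS key if it is off.

    `kms` is a boto3 KMS client (or any object with the same two methods).
    Returns True if rotation had to be enabled, False if it already was.
    """
    status = kms.get_key_rotation_status(KeyId=key_id)
    if status["KeyRotationEnabled"]:
        return False
    kms.enable_key_rotation(KeyId=key_id)
    return True


class FakeKMS:
    """Hypothetical offline stand-in exposing the two methods used above."""

    def __init__(self, enabled: bool = False):
        self.enabled = enabled
        self.enable_calls = 0

    def get_key_rotation_status(self, KeyId):
        return {"KeyRotationEnabled": self.enabled}

    def enable_key_rotation(self, KeyId):
        self.enable_calls += 1
        self.enabled = True
        return {}
```

Against AWS, the same function would be called as `ensure_key_rotation(boto3.client("kms"), key_id)` for each key ID returned by `list_keys`.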

Data Encryption (In-transit)

Securing data in transit: The AWS SDKs and the AWS CLI call AWS service endpoints over HTTPS by default, so that traffic is encrypted with TLS. Beyond that, we further secured data in transit with the following mechanisms and tools:

- Industry-standard transport encryption for network traffic, such as Transport Layer Security (TLS) or IPsec virtual private networks (VPNs)

- ELB with AWS Certificate Manager (ACM)

- Amazon CloudFront with ACM

- Amazon API Gateway with ACM
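For S3, a common complement to the mechanisms above is a bucket policy that rejects any request not made over TLS, using the `aws:SecureTransport` condition key. The sketch below builds such a policy. The bucket name and the function name are hypothetical.

```python
import json


def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy that denies every S3 request not sent over TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # aws:SecureTransport is "false" for plain-HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(deny_insecure_transport_policy("example-phi-bucket"), indent=2))
```

The policy would be applied with `s3.put_bucket_policy(Bucket=..., Policy=json.dumps(policy))`. An explicit Deny overrides any Allow, so plain-HTTP access is blocked regardless of other grants.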

Security at all layers

Network layer

We used Amazon Virtual Private Cloud (Amazon VPC), which offers the following advantages:

- Provides subnets to separate layers

- Uses stateless network access control lists (network ACLs) to restrict traffic between subnets

- Uses route tables to control which subnets, and therefore which instances, can reach the internet

- Features security groups to protect instances from unauthorized network access
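Separating layers into subnets starts with a CIDR plan. The sketch below uses Python's standard `ipaddress` module to carve one subnet per (tier, Availability Zone) pair out of the VPC range. The result can then be mapped to network ACLs, route tables, and security groups per tier. The VPC CIDR, the AZ names, the tier names, and the /24 subnet size are assumptions.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, azs, tiers, new_prefix: int = 24) -> dict:
    """Assign one subnet per (tier, AZ) pair from the VPC CIDR, in order."""
    vpc = ipaddress.ip_network(vpc_cidr)
    if new_prefix < vpc.prefixlen:
        raise ValueError("subnet prefix must be at least as long as the VPC prefix")
    needed = len(tiers) * len(azs)
    available = 2 ** (new_prefix - vpc.prefixlen)
    if needed > available:
        raise ValueError(f"need {needed} subnets but only {available} fit")
    blocks = vpc.subnets(new_prefix=new_prefix)
    plan = {}
    for tier in tiers:
        for az in azs:
            plan[(tier, az)] = str(next(blocks))
    return plan


if __name__ == "__main__":
    # Hypothetical layout: a public tier for load balancers, private app and db tiers.
    layout = plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"], ["public", "app", "db"])
    for (tier, az), cidr in layout.items():
        print(f"{tier:<7} {az:<11} {cidr}")
```

Only the `public` subnets would get a route to an internet gateway. The `app` and `db` subnets stay private, which matches the "turning off public access" practice described later.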

Host-based security

- We implemented host intrusion detection and prevention, which required a configuration management tool.

- We also used OS-based anti-malware and intrusion detection.

- We ran Common Vulnerabilities and Exposures (CVE) scans using Amazon Inspector and patched the reported vulnerabilities.

- We conducted static code analysis using Checkmarx and fixed the reported issues. We typically recommend integrating Checkmarx into the CI/CD pipeline so that developers are alerted to new security vulnerabilities as soon as they are introduced.

- We scanned all the external and open-source code we used.

- We hardened the operating systems and default configurations.

- We maintained hardened and patched images.
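Turning vulnerability scan results into patch work usually means filtering and grouping them. The sketch below builds a per-resource patch queue from Amazon Inspector findings, keeping only active findings rated CRITICAL or HIGH. It reads a simplified subset of the finding fields: `status`, `severity`, `packageVulnerabilityDetails.vulnerabilityId`, and `resources[].id`. Treating only CRITICAL and HIGH as "patch now" is an assumption, and the sample CVE IDs and instance IDs are placeholders.

```python
from collections import defaultdict

# Assumed policy: these severities go straight into the patch queue.
PATCH_NOW = {"CRITICAL", "HIGH"}


def patch_queue(findings) -> dict:
    """Map resource ID -> sorted CVE IDs for active CRITICAL/HIGH findings."""
    queue = defaultdict(set)
    for finding in findings:
        if finding.get("status") != "ACTIVE" or finding.get("severity") not in PATCH_NOW:
            continue
        cve = (finding.get("packageVulnerabilityDetails") or {}).get("vulnerabilityId")
        if not cve:
            continue  # e.g. network-reachability findings carry no CVE
        for resource in finding.get("resources", []):
            queue[resource["id"]].add(cve)
    return {rid: sorted(cves) for rid, cves in queue.items()}
```

The findings themselves would come from a paginated `inspector2.list_findings` call, or from Inspector's EventBridge events.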

General Practices

- We used AWS Shield, which protects against the most common network- and transport-layer DDoS attacks.

- We also used AWS WAF (web application firewall), which helps block cross-site scripting, SQL injection, scanners and probes, bots, and scrapers.

- We used AWS Certificate Manager to manage SSL/TLS certificates.

- We used tokenization to replace sensitive data with non-sensitive tokens.
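Tokenization swaps a sensitive value for a random token that has no mathematical relationship to it. The original value can be recovered only through the system that holds the mapping. The sketch below is a toy, in-memory vault built on Python's `secrets` module; a real deployment would keep the mapping in an encrypted, access-controlled store. The class name and the `tok_` prefix are hypothetical.

```python
import secrets


class TokenVault:
    """Toy in-memory token vault (illustration only, not production storage)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so one value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_urlsafe(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Raises KeyError for tokens this vault never issued.
        return self._token_to_value[token]


if __name__ == "__main__":
    vault = TokenVault()
    record = {"patient": "Jane Doe", "ssn": vault.tokenize("123-45-6789")}
    print(record)
```

Reusing one token per value lets downstream systems join records on the token without seeing the underlying data. The trade-off is that identical values are visibly identical, so a random token per occurrence is the stricter choice when that matters.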

A few guidelines, strategies, and best practices we followed

- Decoupling protected data from processing/orchestration.

- Tracking data flows using automation.

- Having logical boundaries between protected and general workflows.

- Enabling MFA everywhere: Multi-factor authentication (MFA) provides an essential layer of security in any environment. Even if a password is shared or leaked, a malicious user without the second factor cannot access the account. This is critically important in HIPAA-compliant environments.

- Rotating credentials regularly: Credentials that remain active for too long are more likely to be compromised. As a best practice, periodically rotate all credentials, API access keys, and passwords. AWS outlines a manual process that uses the AWS CLI to create a second set of credentials; access keys can also be generated and distributed programmatically.

- Turning off public access: We turn off public access for all compute instances, databases, and storage, so these resources can be reached only from within the network via private IP addresses. We also add a secured bastion host to provide command-line Secure Shell (SSH) access to EC2 instances for troubleshooting and systems administration.

- Granting the least privileges: As a best practice, we start with the minimum permissions and grant more in specific cases, only when necessary.

- Implementing audit logging and monitoring controls: We enable logging, monitoring, and alerts using AWS CloudTrail, Amazon CloudWatch, and AWS Config rules.

- Multi-AZ: We use a standard, external-facing Amazon Virtual Private Cloud (Amazon VPC) Multi-AZ architecture, with separate subnets for the different application tiers and private (back-end) subnets for the application and database. The Multi-AZ architecture helps ensure high availability.
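The credential-rotation practice above is easier to enforce with an automated age check. The sketch below flags active IAM access keys older than a maximum age. It reads entries shaped like the `AccessKeyMetadata` items returned by IAM's ListAccessKeys call (`UserName`, `AccessKeyId`, `Status`, and a timezone-aware `CreateDate`). The 90-day limit and the sample key IDs are assumptions.

```python
from datetime import datetime, timedelta, timezone

# Assumed rotation policy; set this to the organization's own limit.
MAX_KEY_AGE = timedelta(days=90)


def stale_access_keys(key_metadata, now=None, max_age=MAX_KEY_AGE):
    """Return AccessKeyIds of active keys older than max_age.

    `key_metadata` entries follow the shape of IAM ListAccessKeys'
    AccessKeyMetadata items; CreateDate must be timezone-aware.
    """
    now = now or datetime.now(timezone.utc)
    return [
        key["AccessKeyId"]
        for key in key_metadata
        if key["Status"] == "Active" and now - key["CreateDate"] > max_age
    ]
```

Keys flagged here would go through the create-second-key, switch-over, deactivate, and delete sequence described in the rotation guideline.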

By implementing robust security measures, encryption practices, and compliance protocols, healthcare providers can leverage the cloud’s advantages while maintaining the privacy and integrity of sensitive health information.

To ensure your cloud strategy aligns with HIPAA requirements and to safeguard your data effectively, it’s crucial to partner with experts like us, who can guide you through the complexities of compliance and security.

Ready to build a secure and compliant cloud infrastructure for your healthcare organization? Contact us today to learn how we can help you navigate HIPAA regulations and create a robust, cloud-based solution tailored to your needs. Let’s ensure your data is protected while you focus on delivering exceptional care.
