Top 8 Large Language Models Comparison: A Detailed Review

TL;DR

- LLMs drive efficiency, customer experience, and revenue.

- Choosing the wrong model can cause inefficiencies and complexity.

- By 2025, an estimated 750 million apps are expected to use LLMs.

Large language models and natural language processing technologies are driving unprecedented innovation and growth in the Generative AI landscape. As enterprises across the globe rush to leverage these powerful tools, the market is becoming increasingly crowded with options, each promising to enhance efficiency and spur strategic advancements.

Amidst this surge in AI adoption, data leaders face the challenge of selecting the right LLM from a plethora of choices.

With a wide array of models available, making an informed decision is critical to maintaining a competitive edge. An ill-suited choice can lead to operational inefficiencies, underwhelming performance, and increased complexity.

A recent survey projected that by 2025, 750 million apps will be using LLMs and that LLMs will automate 50% of digital work. As enterprise leaders, it's crucial to take charge of your digital strategy and select an LLM that aligns with your specific business objectives and use cases. The right LLM can optimize operations, elevate customer experiences, and unlock new revenue streams.

In this blog, we will provide a comprehensive comparison of leading LLMs, helping you navigate the crowded market and choose the model that best fits your unique business needs.

Let’s explore the world of LLMs and discover how to make the most informed decision for your organization’s success.

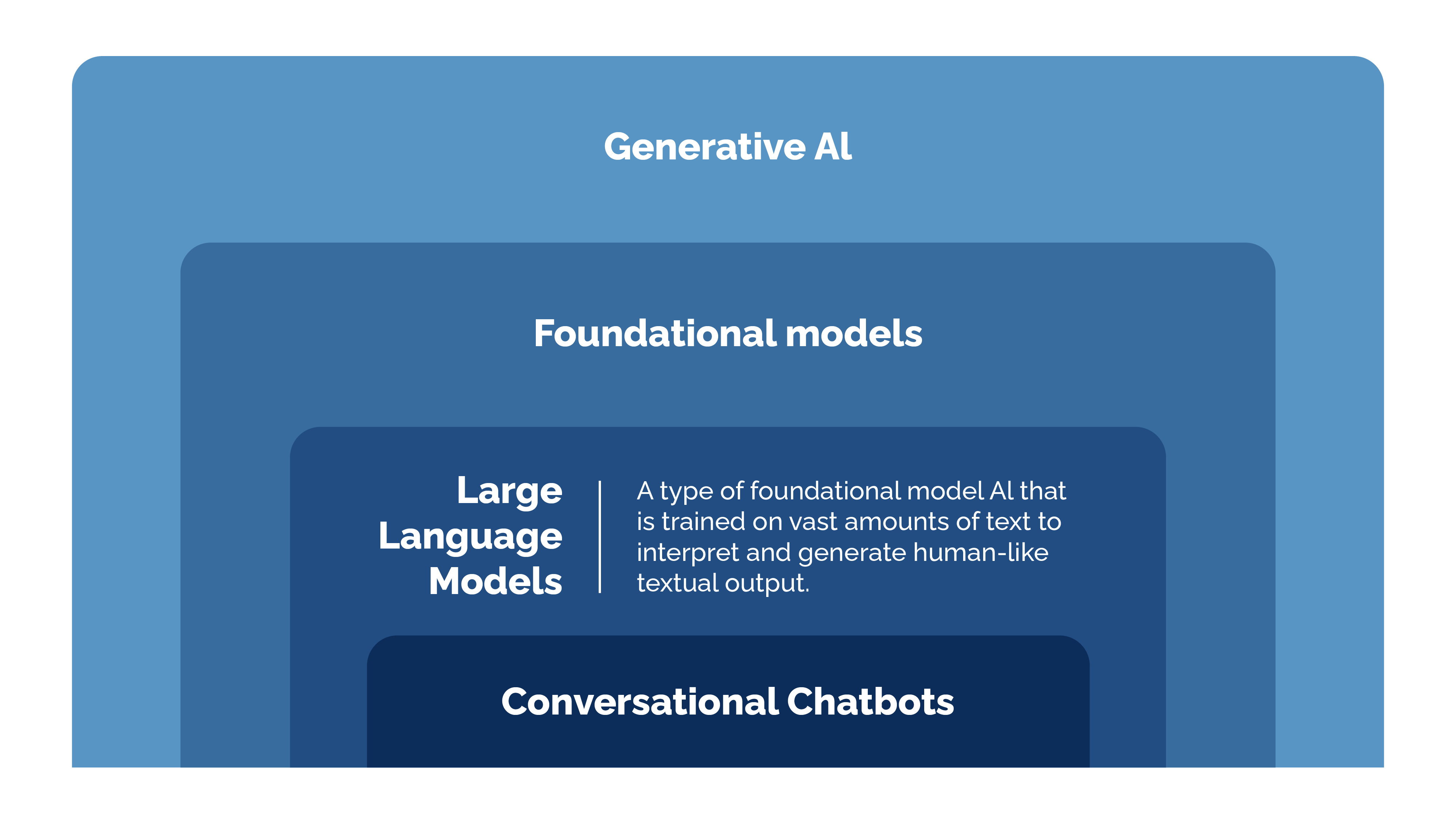

What Are Large Language Models (LLMs)?

A Large Language Model (LLM) is a sophisticated type of artificial intelligence that leverages transformer-based deep learning models like GPT or BERT to process and understand human language. Utilizing self-supervised and semi-supervised learning methods, these foundation models are trained on vast amounts of text data, allowing them to perform a range of language-related tasks such as text generation, summarization, and sentiment analysis.

These capabilities are enabled by techniques such as:

- Tokenization

- Embeddings

- Self-attention mechanisms

- Transformer-based architectures

They help produce human-like language with remarkable accuracy.

Recent advancements in natural language processing (NLP) have introduced variations that use different architectures, such as recurrent neural networks or state space models. However, the majority of LLMs still rely on the transformer architecture.

This image will help you visually understand the positioning of LLMs within the Generative AI realm.

How do they work?

LLMs operate using deep learning techniques, primarily based on transformer architectures like GPT, which excel at processing sequential data such as text. These models are trained on massive datasets such as Common Crawl, Wikipedia, and BookCorpus, to predict the next word in a sentence based on the context provided by preceding words.
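To make "predicting the next word from the preceding words" concrete, here is a deliberately tiny sketch. It uses simple bigram counts rather than a transformer, so it illustrates only the prediction objective, not how a real LLM is built. The corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus; real models train on billions of words.
corpus = [
    "the model predicts the next word",
    "the model reads the prompt",
    "the model predicts tokens",
]
follows = train_bigram(corpus)
print(predict_next(follows, "model"))  # "predicts" follows "model" twice, "reads" once
```

An LLM replaces these raw counts with a neural network that conditions on the whole preceding context instead of a single word.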

This process involves converting text into tokenized forms, which are then transformed into embeddings—numeric representations capturing the contextual meaning of words.

These steps typically include:

- Tokenization: Breaking down text into individual words or subwords

- Embeddings: Converting tokens into numerical vectors that preserve context

- Attention mechanisms: Prioritizing relevant words and phrases in the input

- Transformer processing: Generating output using multiple layers of contextual understanding
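The steps above can be sketched in a few lines of plain Python. This is a minimal illustration under strong simplifying assumptions: whitespace tokenization instead of a subword scheme, random embeddings instead of learned ones, and a single attention step with no learned query, key, or value projections. All function names are hypothetical.

```python
import math
import random


def tokenize(text):
    """Whitespace tokenization; production LLMs use subword tokenizers instead."""
    return text.lower().split()


def build_embeddings(vocab, dim, seed=0):
    """Give each token a random vector (a trained model learns these values)."""
    rng = random.Random(seed)
    return {tok: [rng.uniform(-1, 1) for _ in range(dim)] for tok in vocab}


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention using the inputs as Q, K, and V."""
    dim = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        attn = softmax(scores)
        weights.append(attn)
        # Each output is a weighted average of all token vectors.
        outputs.append(
            [sum(w * v[i] for w, v in zip(attn, vectors)) for i in range(dim)]
        )
    return outputs, weights


tokens = tokenize("the bank approved the loan")
embeddings = build_embeddings(sorted(set(tokens)), dim=4)
vectors = [embeddings[t] for t in tokens]
contextual, weights = self_attention(vectors)
```

Because this sketch has no positional encoding, both occurrences of "the" end up with identical output vectors. Real transformers add position information and stack many such layers.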

Transformers use attention mechanisms, such as self-attention, to focus on relevant parts of the input.

Organizations can effectively deploy LLMs for a range of language-related tasks with high accuracy and efficiency while safeguarding against potential risks by addressing biases, offensive content, and factual inaccuracies, often termed “hallucinations,” that may arise from large-scale unstructured data.

How to Choose the Right LLM for Your Business Use Case

Selecting the right LLM for your specific use case can significantly impact the success of your AI implementation and the results you get from it. Here’s a guide to help you make an informed decision:

[Image: What to consider when choosing an LLM]

Business Alignment

To choose the right Large Language Model (LLM) for your needs, start by defining your business goals and specific use cases. Clearly articulate your use cases, whether it’s developing a conversational AI system, a text summarization tool, or a sentiment analysis application.

Here are common enterprise use cases for LLMs:

- Customer support automation through chatbots

- Summarizing legal, financial, or medical documents

- Social media sentiment tracking and brand monitoring

- Code generation or refactoring assistance

- Personalized content and product recommendations

Each use case has distinct requirements that direct you to a specific category of LLMs (e.g., instruction-tuned, multimodal, or domain-specific models).

Once your use cases are defined, establish Key Performance Indicators (KPIs) to evaluate and compare the effectiveness of different LLMs. Useful KPIs include:

- Accuracy of responses

- Latency or response time

- Cost per 1,000 tokens or per API call

- Customer satisfaction or engagement metrics

Additionally, set KPIs for outcomes, such as customer satisfaction, to ensure that the model not only performs well technically but also meets business objectives.
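As a rough sketch of how these KPIs might be computed from a pilot run, the snippet below aggregates a handful of logged requests. The `EvalRecord` fields and the sample values are hypothetical; in practice they would come from your own logging and grading process.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class EvalRecord:
    correct: bool       # did a reviewer or automated check accept the answer?
    latency_s: float    # end-to-end response time in seconds
    tokens_used: int    # prompt plus completion tokens
    cost_usd: float     # billed cost for this request


def summarize(records):
    """Aggregate per-request logs into the KPIs discussed above."""
    latencies = sorted(r.latency_s for r in records)
    # Nearest-rank 95th percentile.
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)
    total_tokens = sum(r.tokens_used for r in records)
    total_cost = sum(r.cost_usd for r in records)
    return {
        "accuracy": mean(1.0 if r.correct else 0.0 for r in records),
        "mean_latency_s": mean(latencies),
        "p95_latency_s": latencies[p95_index],
        "cost_per_1k_tokens_usd": 1000 * total_cost / total_tokens,
    }


# Hypothetical sample data for illustration only.
records = [
    EvalRecord(True, 0.5, 500, 0.01),
    EvalRecord(True, 1.0, 500, 0.01),
    EvalRecord(False, 1.5, 500, 0.01),
    EvalRecord(True, 1.0, 500, 0.01),
]
summary = summarize(records)
```

Running the same summary for each candidate model on the same set of prompts gives you a like-for-like comparison.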

Some LLMs may offer exceptional performance but require significant computational power, while others might provide a more balanced approach between performance and efficiency. By aligning your selection process with these factors, you can choose an LLM that best supports your specific business needs and operational constraints.
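One simple way to weigh performance against efficiency is a weighted scoring matrix. The sketch below assumes you have already normalized each criterion to a 0 to 1 scale where higher is better. The model names, scores, and weights are placeholders, not measurements of any real model.

```python
def weighted_score(scores, weights):
    """Combine normalized criterion scores (0..1, higher is better) by weight."""
    total_weight = sum(weights.values())
    return sum(weights[c] * scores[c] for c in weights) / total_weight


# Hypothetical priorities for a cost-sensitive customer support use case.
weights = {"quality": 0.5, "speed": 0.2, "affordability": 0.3}

# Placeholder candidates with made-up scores.
candidates = {
    "model_a": {"quality": 0.9, "speed": 0.4, "affordability": 0.3},
    "model_b": {"quality": 0.7, "speed": 0.8, "affordability": 0.8},
}

ranking = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name], weights),
    reverse=True,
)
```

With these weights, the cheaper and faster `model_b` ranks first even though `model_a` scores higher on quality. Changing the weights to reflect your priorities can reverse the ranking.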

Technical Capabilities Evaluations

For each shortlisted LLM, assess criteria such as model performance, computational requirements, and fine-tuning and deployment options.

Check whether the LLM can seamlessly integrate with your existing systems and align with your cloud or on-premises preferences. Assess its ability to handle mass market adoption at scale—whether it can maintain consistent performance under increased demands.

Evaluate them using relevant benchmarks and datasets. Many LLM papers report results on standard NLP benchmarks such as GLUE, SuperGLUE, and BIG-bench, which offer a baseline for comparison.

Here are commonly used benchmarks for evaluating LLM performance:

- GLUE – Tests general language understanding

- SuperGLUE – Covers advanced reasoning and comprehension

- BIG-bench – Probes a broad range of capabilities through more than 200 diverse tasks

- MMLU – Assesses academic knowledge across disciplines
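Public benchmarks are a starting point, but you can apply the same idea to your own data. Here is a minimal sketch of a multiple-choice evaluation loop in the style of MMLU. The `model` argument is any function you supply that returns the index of its chosen answer; the questions and the `always_first` baseline are illustrative stand-ins, not real benchmark items.

```python
def evaluate_multiple_choice(model, questions):
    """Return the fraction of questions where the model picks the right choice."""
    correct = 0
    for q in questions:
        predicted_index = model(q["prompt"], q["choices"])
        if predicted_index == q["answer"]:
            correct += 1
    return correct / len(questions)


def always_first(prompt, choices):
    """Trivial baseline that always selects the first choice."""
    return 0


# Hypothetical questions for illustration only.
questions = [
    {"prompt": "2 + 2 = ?", "choices": ["4", "5"], "answer": 0},
    {"prompt": "Capital of France?", "choices": ["Rome", "Paris"], "answer": 1},
]

baseline_accuracy = evaluate_multiple_choice(always_first, questions)
```

Comparing each candidate against a trivial baseline like this helps confirm that a reported score reflects real capability rather than a quirk of the dataset.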

Although these models are pre-trained on broad datasets, fine-tuning the LLM on your specific tasks is recommended for optimal performance.

Larger models generally offer superior performance but require more computational resources. Balance the trade-off between model size and resource availability based on your infrastructure.
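A quick back-of-the-envelope check helps here: the memory needed just to hold a model's weights is roughly the parameter count multiplied by the bytes per parameter. The sketch below covers weights only and ignores activations, the attention cache, and framework overhead, so treat the result as a lower bound. The function name is hypothetical.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params, precision="fp16"):
    """Estimate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# A 13-billion-parameter model, the size this article cites for Vicuna.
fp16_gb = weight_memory_gb(13e9, "fp16")  # 13e9 params * 2 bytes = 26 GB
int4_gb = weight_memory_gb(13e9, "int4")  # 13e9 params * 0.5 bytes = 6.5 GB
```

This is why quantized versions of a model can fit on hardware that the full-precision version cannot.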

Pro Tips:

For limited infrastructure or faster inference, smaller models like FLAN-T5 or DistilGPT2 work well. For higher reasoning power and enterprise-grade use cases, large models such as GPT-4 or Claude 2 are better suited.

Also, consider whether to use cloud APIs for convenient rapid prototyping or to self-host the LLM for greater control and flexibility, keeping in mind the associated costs and engineering efforts.

Cost & ROI

When selecting an LLM, carefully consider its pricing model, whether it’s pay-per-token, tiered subscription, or enterprise licensing, and make sure it aligns with your projected usage and scaling plans. Account for any additional costs associated with scaling.
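For pay-per-token pricing, a simple projection shows how usage translates into a monthly bill. The sketch below assumes separate prices for input and output tokens. The prices and traffic figures are placeholders, so substitute your provider's current rates.

```python
def monthly_token_cost(
    requests_per_day,
    input_tokens,
    output_tokens,
    input_price_per_1k,
    output_price_per_1k,
    days=30,
):
    """Project monthly spend for a pay-per-token API."""
    per_request = (
        (input_tokens / 1000) * input_price_per_1k
        + (output_tokens / 1000) * output_price_per_1k
    )
    return per_request * requests_per_day * days


# Hypothetical workload and prices, for illustration only.
estimate = monthly_token_cost(
    requests_per_day=10_000,
    input_tokens=500,
    output_tokens=250,
    input_price_per_1k=0.01,
    output_price_per_1k=0.03,
)
# Per request: 0.5 * 0.01 + 0.25 * 0.03 = 0.0125 USD, so about 3,750 USD per month.
```

Re-running the projection at two or three times your expected traffic shows how quickly costs grow as adoption scales.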

It's crucial to understand the total cost of ownership, which includes not only licensing fees but also expenses related to API usage, computational resources, maintenance, and customization. Some models might be accessible for free through platforms like ChatGPT for limited use, but costs can escalate with increased demand or usage.

When assessing the total cost of ownership (TCO), consider:

- Ongoing API or license fees

- Hosting and infrastructure expenses

- Cost of fine-tuning or custom training

- Engineering time for integration and monitoring

- Data privacy, compliance, and security measures

A comprehensive cost-benefit analysis will help you select an LLM that offers the best return on investment while adhering to any legislative and geopolitical boundaries relevant to your organization.
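The cost components above can be rolled into a simple total cost of ownership and ROI calculation. This is a minimal sketch; the field names mirror the list above, and all dollar figures are invented for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class AnnualCosts:
    api_or_license: float   # ongoing API or license fees
    hosting: float          # hosting and infrastructure
    fine_tuning: float      # fine-tuning or custom training
    engineering: float      # integration and monitoring time
    compliance: float       # privacy, compliance, and security measures

    def total(self):
        """Sum every cost component to get the annual TCO."""
        return sum(getattr(self, f.name) for f in fields(self))


def roi(annual_benefit, costs):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    return (annual_benefit - costs.total()) / costs.total()


# Hypothetical figures for a hosted-API deployment.
api_option = AnnualCosts(
    api_or_license=60_000,
    hosting=0,
    fine_tuning=10_000,
    engineering=40_000,
    compliance=10_000,
)
api_roi = roi(annual_benefit=180_000, costs=api_option)  # (180k - 120k) / 120k = 0.5
```

Building one `AnnualCosts` entry per option, for example a hosted API versus a self-hosted model, puts the alternatives on the same footing.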

Support and Ethical Considerations

When selecting an LLM, consider the ongoing support and development of the model. Check whether there is an active community of experts and users contributing to its improvement and expanding its range of use cases.

Ethical considerations are also crucial. Choose models that offer ethical alignment features, including explainability, bias reduction methods like RLHF, and responsible AI practices.

With the increasing focus on ethical AI, it’s important to select an LLM that not only adheres to current ethical standards but also aligns with emerging regulations governing AI use.

The Leading Large Language Models in the Market

Several advanced Large Language Models (LLMs) have significantly impacted the field of artificial intelligence (AI), each offering unique capabilities and advancements.

Based on the availability and accessibility of the models to the public, LLMs can be classified into open-source and closed-source models.

As the name suggests, open-source models are those whose code and, typically, model weights are publicly available for use, modification, and distribution. Conversely, closed-source models keep their code and weights private and are usually accessible only via an API. They are maintained by the developing company and often require a license or prior permission to use.

The choice between open-source and closed-source models often depends on factors such as the need for customization, control over the model, and budget considerations.

Here are some examples of leading Open Source and Closed Source models.

Open Source Models

- Llama 2: Meta AI’s model emphasizes safety and helpfulness in dialog tasks, aiming to enhance user trust and engagement.

- Vicuna: Facilitates AI research by providing a platform for easy comparison and evaluation of different LLMs through a question-and-answer format.

- FLAN-UL2: Utilizing a Mixture-of-Denoisers (MoD) approach, FLAN-UL2 enhances its performance and delivers high-quality, contextually relevant outputs.

- Grok: xAI’s Grok provides engaging conversational experiences with real-time information access and unique features like taboo topic handling.

Closed Source Models

- GPT-4: Developed by OpenAI, GPT-4 represents a major leap in conversational AI with its multimodal capabilities and deep comprehension across various domains.

- Gemini: Introduced by Google DeepMind, Gemini is noted for its innovative multimodal approach and versatile model family designed for diverse computational needs.

- Claude 2: Developed by Anthropic, Claude 2 serves as a robust AI assistant with proficiency in coding and reasoning.

- BloombergGPT: Created by Bloomberg, BloombergGPT is a large-scale generative AI model specifically designed to tackle the complex landscape of the financial industry.

These models are at the forefront of AI innovation, contributing across diverse domains.


Top Large Language Models: A Comparative Analysis

[Image: Top Large Language Models: A Comparative Analysis]

Key Analysis

- GPT-4 and Claude 2 provide high ROI and are widely adopted, but neither is open source. GPT-4 accepts image inputs alongside text, while Claude 2 is text-only.

- Gemini offers multimodal capabilities and is gaining significant traction, though it is not open source.

- Llama 2 and Vicuna are open source and are influential in the research community.

- FLAN-UL2 is noted for its performance across various NLP tasks with increasing adoption.

- BloombergGPT focuses on financial applications and maintains a strong presence in that sector.

- Grok is a more niche model with moderate market penetration.

Let’s take a more detailed look at these LLMs and identify use cases, pros and cons.

1. GPT-4

Generative Pre-trained Transformer 4 (GPT-4) is the latest advancement in the GPT series developed by OpenAI. As the fourth iteration, GPT-4 represents a significant leap forward from its predecessors. This cutting-edge model is not only capable of processing text but also has the ability to handle images alongside text, demonstrating robust performance across a variety of tasks.

Pros:

GPT-4 excels in its multimodal capabilities, meaning it can interpret images alongside text and respond in text, offering a more dynamic range of applications. It is equipped with extensive multilingual support, allowing it to understand and produce text in numerous languages. This broad linguistic capability enhances its usability and makes it accessible to a global audience.

Use Cases:

GPT-4's advanced contextual understanding and versatility significantly enhance the quality of interactions. It is adept at managing complex instructions with greater nuance, leading to more reliable and creative outputs.

Whether it's aiding in coding tasks, excelling in standardized tests like the SAT, LSAT, and the Uniform Bar Exam, or showcasing originality in creative thinking exercises, GPT-4 demonstrates a remarkable ability to handle a wide array of domains effectively.

2. Claude 2

Developed by Anthropic, Claude 2 represents the latest generation of AI assistants, grounded in Anthropic's research on creating systems that are helpful, honest, and harmless. This advanced LLM leverages reinforcement learning from human feedback (RLHF) to refine its output preferences, ensuring that the generated responses align with safety and ethical standards.

Claude 2 is accessible through both an API and a new public-facing beta website, making it widely available for various applications.

Pros:

Claude 2 excels in several areas, including advanced reasoning, mathematics, and coding. It is adept at generating diverse types of written content, summarizing existing materials, and assisting with research. It utilizes a transformer architecture, a neural network design known for effectively managing sequential information.

Claude 2 can understand context and produce appropriate responses based on the input data. This makes it a powerful tool for handling complex tasks.

Use Cases:

Claude 2 is well-suited for processing and analyzing extensive technical documentation or even entire books. It can generate code to support developers in their programming tasks and is capable of managing large volumes of information for research and data analysis. Its ability to handle and retrieve information efficiently makes it valuable for studies, data analytics, and other information-intensive activities.

3. Llama 2

Llama 2, developed by Meta AI, is pretrained on publicly available online data sources. Its fine-tuned variant, Llama Chat, benefits from additional instruction datasets and over 1 million human annotations, and it employs reinforcement learning from human feedback to enhance its safety and usefulness.

While Llama 2 itself is not specifically optimized for chat or Q&A scenarios, it can be effectively prompted to continue text naturally based on the given input.

Pros:

Llama 2 is available for both research and commercial use at no cost, making it an accessible tool for a wide range of applications. Its enhanced capabilities and efficiency make it valuable for individual and professional users alike.

Use Cases:

Llama 2 excels at generating diverse types of content, including blog posts, articles, stories, poems, novels, and even scripts for YouTube or social media. By inputting a few words or sentences, users can prompt Llama 2 to create new, unique text based on its training data.

The model is also effective at condensing lengthy texts into concise summaries while preserving critical information, making it a useful tool for digesting large volumes of content. Llama 2 can enhance and expand existing sentences or paragraphs, adding depth and additional content.

Its natural language processing capabilities allow it to improve the quality and completeness of written material.

4. FLAN-UL2

FLAN-UL2 is a cutting-edge open-source model available on Hugging Face, released under the Apache 2.0 license. It represents an advancement over its predecessors, FLAN-T5 and the original UL2 models, by enhancing the usability of the original UL2 framework.

Developed by Google, FLAN-UL2 builds on the Unifying Language Learning Paradigms (UL2) approach, which integrates a diverse range of pre-training objectives through a technique known as Mixture-of-Denoisers (MoD).

This innovative method blends various pre-training paradigms to improve model performance across different datasets and configurations.

Pros:

FLAN-UL2 is completely open source, making it accessible for widespread use and research. It demonstrates exceptional performance on benchmarks such as MMLU and BIG-Bench Hard, positioning it as one of the top models in its category.

Use Cases:

FLAN-UL2 is versatile and effective across a wide range of tasks, including language generation, comprehension, and text classification. The model can also be applied to question answering, commonsense reasoning, structured knowledge grounding, and data retrieval.

Its few-shot learning capabilities also make FLAN-UL2 a promising tool for exploring in-context learning and zero-shot task generalization in research.
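In practice, few-shot and zero-shot use differ only in how the prompt is assembled: a few-shot prompt includes worked examples before the new input, and a zero-shot prompt has none. The helper below is a generic sketch. The "Input:" and "Output:" layout is an assumption for illustration, not a format prescribed for FLAN-UL2.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, optional worked examples, and the new query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    # Leave the final output blank for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


instruction = "Classify the sentiment as positive or negative."

# Few-shot: two hypothetical worked examples precede the query.
few_shot = build_few_shot_prompt(
    instruction,
    [("Great service", "positive"), ("Slow and buggy", "negative")],
    "Fast and reliable",
)

# Zero-shot: the same task with no examples at all.
zero_shot = build_few_shot_prompt(instruction, [], "Fast and reliable")
```

The resulting string can be sent to any text-generation model, which makes it easy to compare zero-shot and few-shot behavior on the same task.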

5. Grok

Grok is an innovative chatbot developed by Elon Musk’s startup, xAI. This versatile conversational AI is designed to facilitate both serious and light-hearted discussions, catering to a diverse range of users, from researchers to creative professionals. With its engaging and intelligent interactions, Grok goes beyond traditional AI experiences.

The latest release, Grok 1.5V, marks a significant advancement as xAI’s first multimodal model, capable of advanced visual processing. It has been recognized for its strong performance in coding, math, and its minimal censorship, allowing it to address controversial topics with ease.

Pros:

A standout feature of Grok 1.5V is its ability to transform logical diagrams into executable code, simplifying the programming process and enabling users to generate code without deep knowledge of programming languages. Grok 1.5V excels in the RealWorldQA benchmark, which assesses real-world spatial understanding, demonstrating superior capabilities compared to its peers.

Use Cases:

Grok’s ability to convert logical diagrams into code makes programming accessible to individuals with a strong logical foundation, bypassing the need for in-depth language knowledge and allowing users to start building projects immediately. Unlike many AI models that struggle with accurate calculations, Grok 1.5V extracts information from images to perform precise mathematical computations, enhancing its reliability in practical applications.

Grok's depth in understanding images is demonstrated through its ability to interpret simple drawings and generate stories based on the inferred elements. This highlights its advanced visual processing and narrative generation capabilities.

6. Gemini

Gemini is a suite of generative AI models developed by Google DeepMind, designed to excel in multimodal applications. Unlike traditional text-only LLMs, Gemini integrates various types of data, such as images, charts, and videos, to provide a more comprehensive understanding of tasks.

Trained and fine-tuned from the ground up on multimodal datasets, Gemini stands out for its ability to seamlessly combine information from different modalities, offering a cohesive grasp of complex contexts.

Pros:

Gemini's advanced capabilities in processing and understanding multiple types of data simultaneously set it apart from conventional models. The model demonstrates sophisticated general-purpose language understanding.

It excels at extracting detailed information through nuanced reasoning across various data types.

Use Cases:

Developers can fine-tune Gemini with enterprise-specific data and incorporate it into applications. This enables the creation of advanced tools like intelligent search engines and virtual agents that handle a wide array of multimodal tasks effectively.

Gemini’s robust reasoning abilities allow for rapid analysis of extensive documents, facilitating efficient extraction of meaningful insights. This significantly speeds up the process of uncovering valuable information compared to traditional manual methods.

7. BloombergGPT

BloombergGPT is a specialized large language model (LLM) that has been meticulously trained on extensive financial data to enhance natural language processing (NLP) tasks within the financial sector. Recognizing the complexity and unique terminology of the financial industry, BloombergGPT represents a pioneering advancement tailored specifically for this domain.

This model is set to enhance Bloomberg’s existing NLP capabilities, including sentiment analysis, named entity recognition, news classification, and question answering.

Pros:

BloombergGPT significantly outperforms other models of similar size on financial NLP tasks while maintaining strong performance on general LLM benchmarks.

Use Cases:

BloombergGPT can analyze market trends, historical data, and risk factors to assist in optimizing investment portfolios, aiming to maximize returns. The model can be utilized to identify fraudulent activities by analyzing transaction data and spotting irregularities.

BloombergGPT can evaluate market sentiment by analyzing news, social media, and financial reports, providing valuable insights for investment strategies.

8. Vicuna

Vicuna, developed by a collaborative team from UC Berkeley, CMU, Stanford, and UC San Diego, is an open-source chatbot with 13 billion parameters.

As one of the pioneering open-source large language models, Vicuna is distinguished by its training on human-generated data, which enables it to produce coherent and creative text. It represents an enhancement over the Alpaca model, leveraging the Transformer architecture and being fine-tuned on a dataset of human conversations.

This makes Vicuna an invaluable resource for developing advanced chatbots and for researchers exploring large language models. Its introduction marks a significant advancement in natural language processing, making sophisticated language models more accessible to the public.

Pros:

Vicuna generates more detailed and well-structured responses compared to Alpaca, with performance levels comparable to ChatGPT. It features an intuitive interface, allowing users to interact with the model, input prompts, and obtain desired outputs without needing extensive coding knowledge.

Vicuna is designed to integrate smoothly with existing tools and platforms. Whether you're using Python, Java, or another programming language, incorporating Vicuna into your workflow is straightforward.

Use Cases:

Vicuna is ideal for crafting blog posts, brainstorming book ideas, or generating creative text. It provides coherent, relevant, and tailored content to meet your specific needs. It excels at answering queries and retrieving relevant information, making it a valuable tool for obtaining accurate answers and resources.

Vicuna can handle large volumes of data, extracting key insights and summarizing them in a clear and concise manner, helping to manage data overload efficiently.

Our AI experts at Ideas2IT can help you benchmark, compare, and integrate the ideal model, tailored to your goals.

Discover the Perfect LLMs for Your Business Needs – Let Us Help You Find the Right Solution!

These comparisons are just the tip of the iceberg; the landscape of model comparisons is vast and continually evolving. Navigating this complex field requires expert guidance to make informed decisions tailored to your specific project needs, which is where Ideas2IT excels.

Our team of data leaders excels in helping you visualize and articulate your generative AI requirements. We provide comprehensive support from initial analysis through to implementation and ongoing maintenance. With our expert guidance and extensive experience, we will help you establish a strong competitive advantage and become a market leader in your domain.

Looking to find the perfect LLM solution for your specific business needs? Reach out to us today and see how we can help you!
