In the modern world, the data for healthcare organizations are channeled from multiple sources like EHR, patient portals, billing systems, and more. It has become inevitable for these organizations to migrate, transform and collate these data from different sources for Data Analytics. By adopting the latest technologies available in the market, this process could be simplified to a greater extent.

Mirth is a popular Solution for Integrating Healthcare Data. While Mirth provides a wealth of in-built functionality, it has problems with scalability and performance. Recently, we modernized a Healthcare data ingestion platform for one of our customers from Mirth to a cloud-native solution using Apache Spark. In this blog, we walk you through how we did this and the performance improvements that followed.

What Is Apache Spark?

Apache Spark is an open-source, distributed multi language computing system designed for big data processing and analytics on single-node machines or clusters. It provides a fast and flexible framework for processing large datasets across clusters of computers. Key features include:

- Speed: Spark is known for its in-memory processing capabilities, allowing it to perform tasks much faster than traditional disk-based systems like Hadoop MapReduce.

- Ease of Use: It offers high-level APIs in languages like Python, Java, Scala, and R, making it accessible to developers with different programming backgrounds.

- Unified Engine: Spark supports various workloads, including batch processing, stream processing, machine learning, and graph processing, all within the same framework.

- Rich Ecosystem: It integrates with various data sources, such as HDFS, Apache Cassandra, and Amazon S3, and has libraries for machine learning (MLlib), graph processing (GraphX), and SQL querying (Spark SQL).

Challenges Using Mirth for Large Dataset Processing

- With parsing, validation, and transformation functions in place for every field of input, when the data size is in GBs, Mirth can take nearly a day to process the data, causing significant delays.

- It is hard to achieve better scalability with Mirth when the input data size varies (say from 100 MB to 100 GB). The solution should be capable of throttling the resources based on the load.”

- It is difficult to orchestrate and monitor the entire data pipeline using Mirth.

To overcome the above-mentioned challenges, Mirth should be replaced by Spark for faster and more efficient data processing. A cloud-based integration is recommended for such solutions with high data processing needs.

Before embarking on the cloud-based integration, let's delve deep to fully understand the Mirth framework.

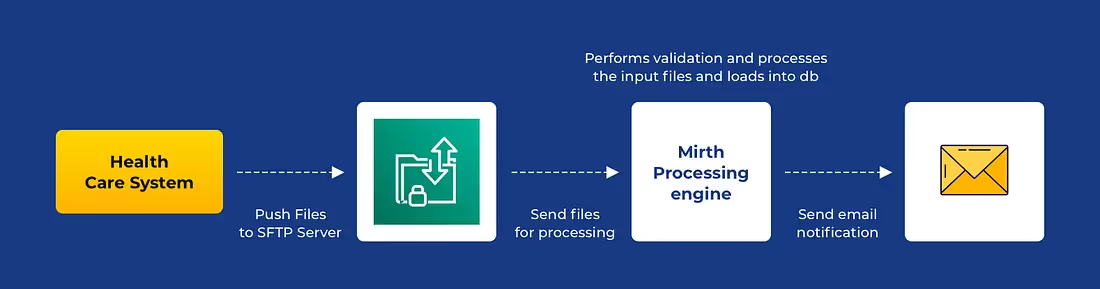

Understanding Mirth Architecture

- The healthcare payer-related information is generated as an Inbound Recipient File (space-delimited text files with fixed-width strings). The inbound files are transferred to the SFTP server

- The inbound files are transferred to the SFTP server

- The role of the processing engine is to parse and transform the input feed. In the existing architecture, Mirth is used as the processing engine. The role of the Mirth processing engine is as follows:

- Parse the input fixed-length text files.

- Validate each field in the inbound recipient file (validating string length, string format, etc.).

- Apply transformation to the fields (converting date time to specific formats, generating unique IDs, trimming, etc.).

- Create success and failure flat files:

- The success flat file contains the rows which have passed all the validations.

- The failure flat file contains rows if any of the fields failed on the validation step.

- Update EVV tables with the success and failure information.

- Update the healthcare payer database tables with the success and failure rows.

Sample Input Format

The recipient Inbound file will be in the form of a text file with a fixed-length sequence.

2692 CA First Street California 11111111111 Fax: 1111111111 Female 04/04/1950 Monica Latte 4444 Coffee Ave Chocolate, California 90011 Carl Savem Female Divorced English DIABETES MELLITUS 0 0 0 0 0 CA4932 250

A data dictionary has been provided to parse and identify the column information from the above-mentioned fixed-length strings.

For example, the first 4 letters correspond to Source System (2692) — i.e., the next 2 letters correspond to Jurisdiction (CA), etc.

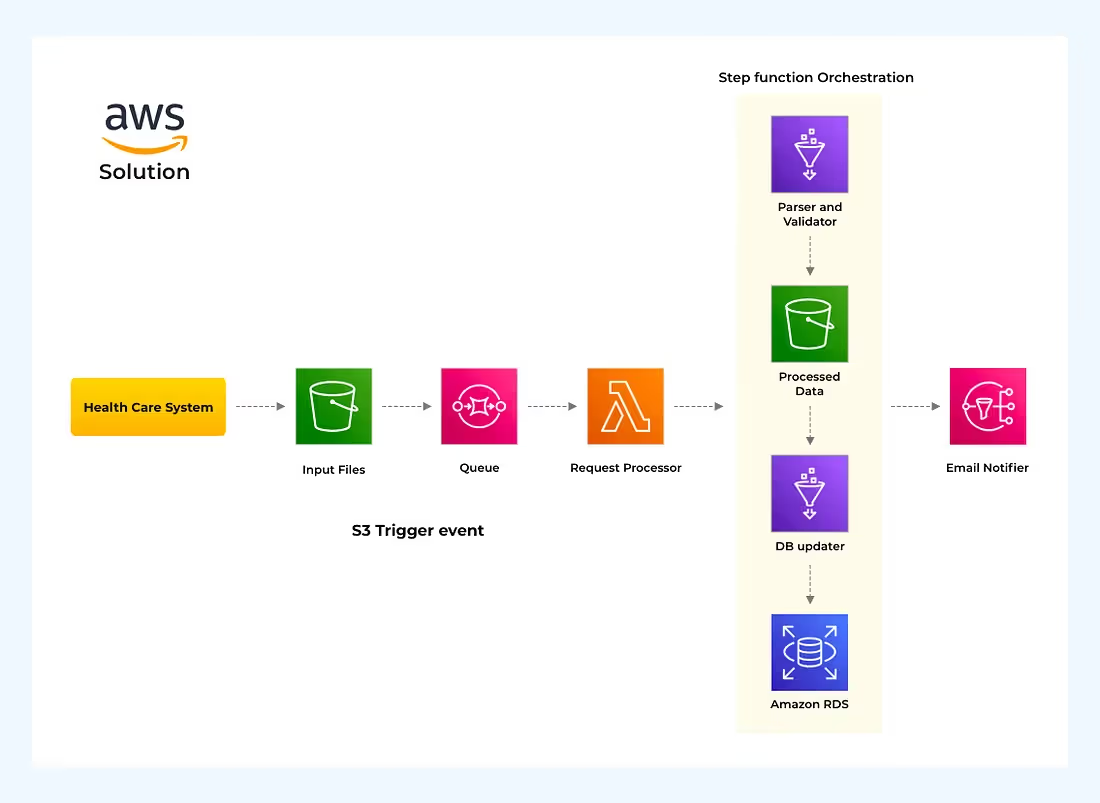

AWS Cloud Solution

To eliminate the data processing limitations due to the size of the database, here is the proposed infrastructure.

Following are the steps performed in the cloud-native solution.

- Using a batch loader, the input files are retrieved from the source system and fed into the Amazon S3 bucket.

- An event notification is set in the Amazon S3 bucket to look for incoming files and trigger a Lambda.

- The Lambda function reads the incoming file names from the S3 bucket and pushes it inside an Amazon SQS queue.

- The service orchestration layer has been implemented using Amazon Step Functions. Following are the four orchestration elements used by the Step function.

- AWS SQS

- AWS Glue

- AWS RDS

- AWS SNS

- AWS Step function takes necessary actions if there is a success or failure in the above-mentioned steps

- The main drawback of the Mirth-based architecture is scalability. In cloud-based architecture, we can scale up or scale down the processing job based on the input load.

- In the cloud-native architecture, Glue + Pyspark is used for validation and transformation of input files (i.e. all the javascript validations/transformations are converted to PySpark code for better scalability.

- Wherever there is an input message in AWS SQS, the Step function will invoke a Glue job. The role of Glue job is as follows.

- Parse the inbound recipient feed using a data dictionary and load it as a Spark Dataframe.

- Perform validations (which were earlier done by Mirth) on the input fields and segregate success and failure records.

- Apply transformation functions to the success records.

- Store the Success and Failure records in S3 as a flat-file.

- Update the AWS RDS (MySQL tables) with the success and failure information.

- Once the data has been successfully written to the database, use AWS SNS to send an email notification.

Benchmarks

To establish the right benchmarks for the upgraded system, we conducted a dry run using an inbound file with the following specifications

- File size — 1.5 GB

- Total number of records — 2 Million

- Success to Failure ratio — 80:20

- Number of DPUs used — 3 standard DPUs

Following are the metrics from the new design.

- File size: 1.5 GB

- Total number of records to be processed: 2 million

- Total time taken to process: 13 minutes

- Time taken for validation and transform: 6 minutes

- Time taken for updating DB: 7 minutes

With the cloud-based architecture (PySpark), the total time taken for processing a 1.5 GB file was around 13 minutes whereas with the older architecture (Mirth), it took almost a day to process those same records.

Advantages

Following are the advantages of using Cloud-based architecture.

Enhance Scalability:

- Since the process and database layers are separated, better scalability is achieved. Based on the input loads, the number of DPUs can be dynamically adjusted.

AWS Glue Capabilities:

- It can process the files in GBs in one shot and write the results to S3. There is no need of dividing the inputs into chunks.

Monitoring and Notification:

- Better monitoring and scalability is achieved using AWS SNS and AWS Step Functions. Any error/failure in the orchestration unit is gracefully handled using AWS Step functions (with retries and notification).

Like our articles on Healthcare? Then check out the following blogs.

- HIPAA compliant Data Lake on AWS – Tools, Techniques, and Checklists

- How to do Clinical Documentation Comprehension using Spark NLP: A step-by-step guide

- Addressing the Data Imbalance Problem in Healthcare

- Spark NLP for Healthcare 3.1.0 is here with new upgrades!

Conclusion

While Mirth has been a popular choice for healthcare data integration, its limitations in scalability and performance become evident when handling large datasets. Our transition from Mirth to Apache Spark not only addressed these challenges but also significantly improved processing times and operational efficiency.

By leveraging a cloud-native architecture, we successfully modernized our data ingestion platform, demonstrating that Spark can provide a robust solution for large-scale data transformation and validation. The results speak for themselves: what once took nearly a day can now be accomplished in mere minutes, allowing healthcare organizations to make timely, data-informed decisions.

As the demand for scalable solutions continues to rise, adopting technologies like Apache Spark is essential for organizations looking to stay competitive in the healthcare ecosystem. Transitioning to such advanced systems ensures better performance, flexibility, and resilience in the face of growing data complexities. Contact us to explore more ideal replacement solutions for your modernization needs.

%20Understanding%20the%20Role%20of%20Agentic%20AI%20in%20Healthcare.png)

%20AI%20in%20Software%20Development_%20Engineering%20Intelligence%20into%20the%20SDLC.png)

%20AI%27s%20Role%20in%20Enhancing%20Quality%20Assurance%20in%20Software%20Testing.png)

.png)

%20Tableau%20vs.%20Power%20BI_%20Which%20BI%20Tool%20is%20Best%20for%20You_.png)

%20AI%20in%20Data%20Quality_%20Cleansing%2C%20Anomaly%20Detection%20%26%20Lineage.png)

%20Key%20Metrics%20%26%20ROI%20Tips%20To%20Measure%20Success%20in%20Modernization%20Efforts.png)

%20Hybrid%20Cloud%20Strategies%20for%20Modernizing%20Legacy%20Applications.png)

%20Harnessing%20Kubernetes%20for%20App%20Modernization%20and%20Business%20Impact.png)

%20Monolith%20to%20Microservices_%20A%20CTO_s%20Decision-Making%20Guide.png)

%20Application%20Containerization_%20How%20To%20Streamline%20Your%20Development.png)

%20ChatDB_%20Transforming%20How%20You%20Interact%20with%20Enterprise%20Data.png)

%20Catalyzing%20Next-Gen%20Drug%20Discovery%20with%20Artificial%20Intelligence.avif)

%20AI%20Agents_%20Digital%20Workforce%2C%20Reimagined.avif)

%20How%20Generative%20AI%20Is%20Revolutionizing%20Customer%20Experience.avif)

%20Leading%20LLM%20Models%20Comparison_%20What%E2%80%99s%20the%20Best%20Choice%20for%20You_.avif)

%20Generative%20AI%20Strategy_%20A%20Blueprint%20for%20Business%20Success.avif)

%20Mastering%20LLM%20Optimization_%20Key%20Strategies%20for%20Enhanced%20Performance%20and%20Efficiency.avif)

.avif)

.avif)

.avif)

.webp)